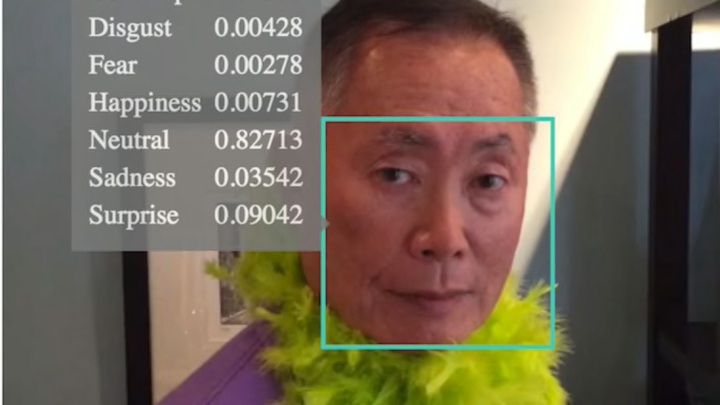

Can you tell how someone is feeling just by looking at their face? Microsoft's Project Oxford team has developed an experimental tool that it says can detect a person's emotional state just by analyzing a photo of their expression. Each emotion is scored as a decimal value to five places; together the scores add up to 0.99999 and form a numerical representation of complex human feelings.

The emotions that the API claims to recognize are anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. "These emotions are understood to be cross-culturally and universally communicated with particular facial expressions," reads the Project Oxford website. The tool can recognize and analyze as many as 64 faces in a single image, but the site cautions that "frontal and near-frontal faces have the best results" and that "recognition is experimental, and not always accurate."
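For readers who want to work with the numbers rather than just look at them, here is a minimal Python sketch of how one face's eight scores might be summarized. It does not call the Project Oxford service: the `summarize` helper and the sample values are invented for illustration, and the only things taken from the description above are the eight emotion names and the idea that each face gets one decimal score per emotion.

```python
# The eight emotion labels named in the article.
EMOTIONS = (
    "anger", "contempt", "disgust", "fear",
    "happiness", "neutral", "sadness", "surprise",
)


def summarize(scores):
    """Return (dominant_emotion, total) for a mapping of emotion -> score.

    `scores` is a hypothetical dict for a single face; this helper is an
    illustration, not part of any Microsoft library.
    """
    missing = [name for name in EMOTIONS if name not in scores]
    if missing:
        raise ValueError("missing scores for: " + ", ".join(missing))
    # The dominant emotion is simply the label with the largest score.
    dominant = max(EMOTIONS, key=lambda name: scores[name])
    # Round to five places, matching the precision the article describes.
    total = round(sum(scores[name] for name in EMOTIONS), 5)
    return dominant, total


# Made-up values for a face that reads as roughly half happy, half neutral.
example_scores = {
    "anger": 0.00012,
    "contempt": 0.00310,
    "disgust": 0.00008,
    "fear": 0.00002,
    "happiness": 0.49100,
    "neutral": 0.50200,
    "sadness": 0.00350,
    "surprise": 0.00017,
}

dominant, total = summarize(example_scores)
print(dominant, total)
```

With these invented numbers, "neutral" narrowly beats "happiness", which shows how a near-tie between two emotions can hide behind a single top label.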

Hyperallergic tested the tool to see how it analyzed the emotions of famous works of art. The tool judged Mona Lisa's smirk to be half happiness and half neutral, and it rated the expression of Dorothea Lange's Migrant Mother (1936) as mostly neutral, with less sadness than the iconic photo actually conveys. Using photos from iStock, YouTube, and Wikimedia Commons, we decided to try the tool for ourselves to see how accurate or inaccurate it could be (note: it does not work on photos of angry cats). Check out the results below, and conduct your own emotional photo experiment on Microsoft's Project Oxford website.

[Public domain], via Wikimedia Commons // Microsoft's Project Oxford

[h/t Hyperallergic]