Over the years, there's been a lot of debate about what should and shouldn't count as a Broadway play or musical. Still, it's widely agreed that, in order to qualify, a production needs to run at a Broadway theater.

In general, a Broadway theater is defined as one that's located in Manhattan and seats at least 500 people. (Actually being located on Broadway is not a requirement.) Those on the island with 100 to 499 seats are regarded as "Off-Broadway" venues. Meanwhile, establishments with 99 seats or fewer are deemed "Off-Off-Broadway."

If the facility hosts concerts and dance shows more often than it does plays or musicals, it isn't considered a Broadway theater, regardless of the seating situation. Because of this, Carnegie Hall doesn't make the cut, even though its main auditorium has way more than 500 seats (2,804, to be precise).
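
For readers who like their rules of thumb spelled out, the seat-count tiers above can be sketched in a few lines of Python. This is only an illustration of the general definition described here, not an official Broadway League test: the function name, its parameters, and the "unclassified" and "not a Broadway theater" labels are all made up for the example, and it assumes the concert-hall exception applies only to venues with 500 or more seats, since that's the only case the rule addresses.

```python
def classify_venue(seats, in_manhattan=True, mostly_theater=True):
    """Label a venue using the seat-count rule of thumb described above.

    All names and labels here are hypothetical, chosen for illustration.
    """
    if not in_manhattan:
        # The tiers above are defined only for venues on the island.
        return "unclassified"
    if seats >= 500:
        # Big halls that mostly host concerts or dance don't count.
        return "Broadway" if mostly_theater else "not a Broadway theater"
    if seats >= 100:
        return "Off-Broadway"
    return "Off-Off-Broadway"


# Carnegie Hall's main auditorium: plenty of seats, but mostly concerts.
print(classify_venue(2804, mostly_theater=False))  # not a Broadway theater
print(classify_venue(499))                         # Off-Broadway
print(classify_venue(99))                          # Off-Off-Broadway
```

Note that the boundaries are inclusive on the low end of each tier: exactly 500 seats is enough for Broadway, and exactly 100 is enough for Off-Broadway.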

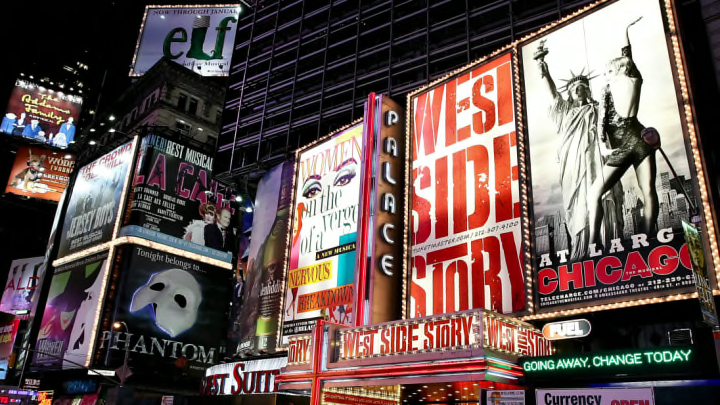

How many Broadway theaters are in Manhattan proper? The industry's national trade association, the Broadway League, currently recognizes only 41 legitimate Broadway theaters, most of which sit between West 40th and West 53rd Streets in Midtown Manhattan. By comparison, Off-Broadway and Off-Off-Broadway stages are more widely dispersed throughout New York City.

Every year, the Broadway League joins forces with the American Theatre Wing to administer one of the Big Apple's biggest celebrations: the Tony Awards. To be eligible for these prizes, a show must open at a Broadway League-certified Broadway theater at some point in the current season before a designated cut-off date (which, for the 2019 awards, was April 25).

Given these rules, the Awards completely ignore Off-Broadway productions. But this doesn't mean that you should. Some of the most popular shows ever conceived started out at Off-Broadway venues. For example, the original production of Little Shop of Horrors opened in 1982 and ran for five years without ever making it to the Great White Way—although a Broadway revival did pop up in 2003.

For many productions, Off-Broadway is a stepping stone. Just a few months after opening up at smaller theaters, Hair, A Chorus Line, and, more recently, Hamilton all made the jump to a Broadway stage.

That transition isn't always easy. Often, new sets have to be built and, sometimes, key players have to be re-cast. Furthermore, as producer Gerald Schoenfeld told Playbill in 2008, the Off-Broadway venue where it all began won't want to be left "high and dry" after the show leaves. "[You'll] probably have to make arrangements with the originating theater," he said, "which probably would require a royalty and possible percentage of net profits."

Broadway productions also come with much higher price tags. When you factor in things like talent fees, rehearsals, and marketing, the average Broadway play costs millions of dollars to produce. An estimate from The New York Times says a Broadway show costs "at least $2.5 million to mount," while larger-scale musicals fall in the $10 million to $15 million range. Playbill broke down the costs for staging the Tony-winning musical Kinky Boots in 2013; the show cost $13.5 million to get off the ground.

Unsurprisingly, it's become quite difficult to turn a profit on the Great White Way. According to the Broadway League, only one in five Broadway shows breaks even. Furthermore, those lucky few that actually make money have to run for an average of two years before doing so.

As they say, there's no business like show business …

This story was updated in 2019.