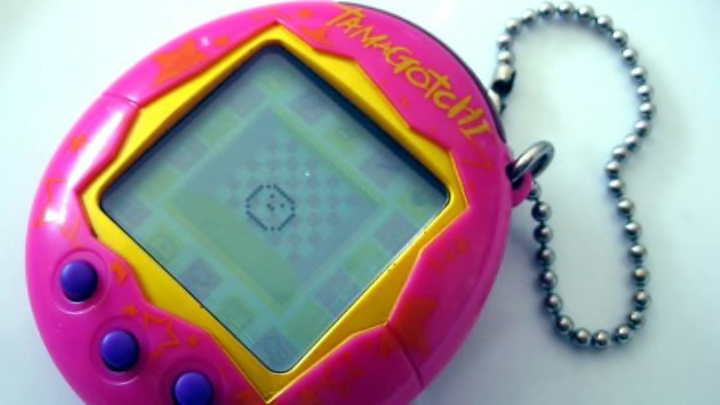

As a child, I was not allowed to have a real pet, so I had to settle for a Tamagotchi. These small handheld devices simulated the chores of a real pet—you had to feed them and clean up their messes—but lacked the fun parts of pet ownership, like having a sentient being you can bond with. A big problem with Tamagotchis was that school tended to get in the way of pet parenting, and my little virtual friend would routinely pass away from neglect. This always made me wonder, how did this piece of plastic know how long I was gone?

Tamagotchis may have been cheap, but they contained the same timekeeping hardware as most personal computers: a real-time clock (RTC). In a computer, the RTC is usually part of a microchip on the motherboard. To keep time, the clock uses a quartz crystal oscillator, which vibrates at a stable frequency and produces a periodic electrical signal. By counting those oscillations, the computer can tell how much time has passed. The RTC is powered by a very small battery (usually lithium) that keeps it running even when the computer is off. That way, when you turn the computer back on, the time is still set.
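To make the counting idea concrete, here is a minimal Python sketch of how a tally of crystal oscillations could be turned into elapsed time. It assumes a 32,768 Hz crystal, a frequency commonly used in watches and RTCs but not one stated above, and the function name `elapsed_from_ticks` is made up for illustration. Real RTC chips do this counting in hardware, so treat this as an illustration rather than a description of any particular device.

```python
# Assumption: a 32,768 Hz (2**15) watch crystal, a common choice for RTCs.
# The article does not say which frequency a Tamagotchi or PC actually uses.
CRYSTAL_HZ = 32_768


def elapsed_from_ticks(ticks: int, hz: int = CRYSTAL_HZ) -> tuple[int, int, int]:
    """Convert a raw oscillation count into (hours, minutes, seconds)."""
    total_seconds = ticks // hz          # every `hz` oscillations is one second
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return hours, minutes, seconds


# Hypothetical example: oscillations counted over a seven-hour school day.
ticks_during_school = 7 * 3600 * CRYSTAL_HZ
print(elapsed_from_ticks(ticks_during_school))  # (7, 0, 0)
```

Because the count keeps growing whether or not anyone is watching, a device only has to compare the current count with an earlier one to know how long it has been left alone.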

Most computers nowadays synchronize with standardized international timekeepers over the Internet, though they still rely on crystal oscillators when not connected. Because of this, the fanciest personal computer, when off the Internet, will tell time no better than a cheap digital watch. “Getting accurate time out of virtual machines is something that has plagued all of the virtual machine providers,” software engineer George Neville-Neil told PCWorld. Tamagotchis included.