Theodore Roosevelt’s fever was approaching 104 degrees, and he was delirious. “In Xanadu did Kubla Khan / A stately pleasure dome decree:” he mumbled. “Where Alph, the sacred river ran / Through caverns measureless to man / Down to a sunless sea.” Then he began again: “In Xanadu did Kubla Khan ...”

The situation was dire. It was early 1914, and the 55-year-old former president—accompanied by his son, Kermit, naturalist George Cherrie, expedition co-commander Colonel Cândido Rondon, and a small team of other Brazilians—was deep in the Brazilian rainforest, attempting to navigate the 950-mile-long Rio da Dúvida, the River of Doubt (and, these days, the Roosevelt River). They were all in rough shape—dirty, malnourished, bug-bitten—but none more so than Roosevelt: He’d been hobbling along since he’d bashed his leg against a rock a few days earlier, and it was getting infected; now, the fever.

As Roosevelt recited poet Samuel Taylor Coleridge's lines over, and over, and over, a storm thrashed the camp. “[F]or a few moments the stars would be shining, and then the sky would cloud over and the rain would fall in torrents, shutting out sky and trees and river,” Kermit wrote. Roosevelt, on a cot, was shivering violently, wracked with chills.

He was given quinine orally, to no avail; it was then injected into his gut. By morning, he had rallied. Still, he was weak, and urged the men to leave him behind. But they refused, and their difficult journey over two sections of rapids continued as Roosevelt’s fever rose again. “There were ... a good many mornings when I looked at Colonel Roosevelt and said to myself, 'he won’t be with us tonight,'” Cherrie would later say. “And I would say the same in the evening, 'he can’t possibly live until morning.'”

Roosevelt had suffered from recurring bouts of what he called Cuban fever since his days as a Rough Rider during the Spanish-American War. But what he was actually suffering from—and would ultimately survive when he emerged from the Brazilian rainforest—was malaria.

Malaria is caused by a microscopic protozoan, which is transmitted by female Anopheles mosquitoes, and it has wreaked havoc for millennia: Carl Zimmer writes in his book Parasite Rex that malaria has, by some estimates, killed "half of the people who were ever born." Three percent of all humans are infected every single year, and according to Zimmer, malaria fells one person every 12 seconds. In 2016, the parasite infected approximately 216 million people and killed 445,000. Most who die are children under 5.
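The figures in this paragraph can be cross-checked with some quick arithmetic. The sketch below is illustrative only: it assumes a 2016 world population of roughly 7.4 billion (a number the article does not give) and a 365-day year, and the function names are made up for the example.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds in a 365-day year


def share_infected(cases, population):
    """Fraction of the population infected in one year."""
    return cases / population


def seconds_between_deaths(deaths_per_year):
    """Average gap between deaths, assuming they are spread evenly over the year."""
    return SECONDS_PER_YEAR / deaths_per_year


def deaths_per_year_at_interval(seconds):
    """Annual deaths implied by one death every `seconds` seconds."""
    return SECONDS_PER_YEAR / seconds


WORLD_POPULATION_2016 = 7.4e9  # assumption, not from the article
CASES_2016 = 216e6             # from the article
DEATHS_2016 = 445_000          # from the article

print(f"Share infected: {share_infected(CASES_2016, WORLD_POPULATION_2016):.1%}")
print(f"Seconds between deaths: {seconds_between_deaths(DEATHS_2016):.0f}")
print(f"Deaths per year at one per 12 s: {deaths_per_year_at_interval(12):,.0f}")
```

Under these assumptions, the 2016 case count comes to a little under 3 percent of humanity, in line with the "three percent" figure. The 2016 death count works out to about one death every 71 seconds, while one death every 12 seconds would imply roughly 2.6 million deaths a year, so Zimmer's interval presumably reflects an older or higher estimate.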

Those who survive malaria can experience problems like kidney or lung failure and neurological deficits. The Centers for Disease Control and Prevention (CDC) estimates that the direct costs of malaria—traveling for treatment, buying medicine, and paying for a funeral, for example—are at least $12 billion annually, and that “the cost in lost economic growth is many times more than that.” (One study, published in 2001, noted that the economies of “countries with intensive malaria grew 1.3 percent less per person per year, and a 10 percent reduction in malaria was associated with 0.3 percent higher growth.”) But the parasite’s influence reaches beyond death tolls and monetary losses.

Dr. Susan Perkins, American Society of Parasitologists immediate past president and malaria research scientist at the American Museum of Natural History, has thought a lot about how infectious diseases and parasites have changed history. While pathogens such as typhus, spread by lice, have destroyed armies (Napoleon’s men, for example, were pummeled by the disease), Perkins says it’s impossible to speculate how, exactly, they changed history. But in the case of malaria, the picture is clearer. “If you go way back to what makes us human,” Perkins says, “I don’t think there’s much question there in terms of [what is the] most influential parasite.”

Malaria indirectly spawned the environmental movement, led to the formation of an agency devoted to public health, contributed to the extinction of bird species in Hawaii, and shaped the course of human evolution. It is now compelling scientists to explore high-tech solutions—straight out of science fiction—that could save millions of lives.

Humans have known malaria for a long time—in fact, it existed well before we did, and probably infected even the dinosaurs. The disease (or at least one like it) was described 4000 years ago, in Chinese medical texts; Ancient Egyptian mummies, entombed 3500 years ago, harbored the parasite. Malaria even popped up in literature, notably in the works of Shakespeare (the Elizabethans called it ague: “Here let them lie / Till famine and the ague eat them up,” Macbeth intoned).

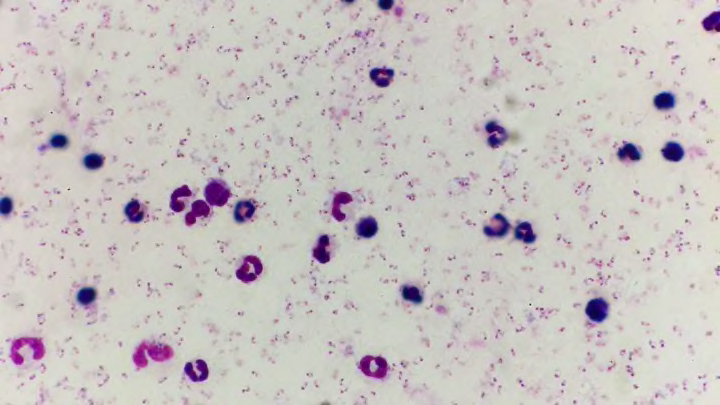

The name, first used around 1740, comes from the Italian words mal and aria, literally, “bad air”—a throwback to a time when it was thought foul air caused the disease. It wasn’t until the 1880s that French army surgeon Charles Louis Alphonse Laveran discovered what would later be called Plasmodium parasites wiggling in the blood of a patient; it would take another 17 years for British officer Dr. Ronald Ross, a member of the Indian Medical Service, to demonstrate that mosquitoes transmitted the disease.

Four species of malaria parasite commonly infect humans: Plasmodium falciparum, P. vivax, P. ovale, and P. malariae. P. falciparum leads to the most severe infections, and the most deaths; P. vivax is the most common, and causes a more intense paroxysm: a very high fever, followed by severe, wracking chills. “There’s an old saying,” says Dr. Jane Carlton, whose New York University lab focuses on the comparative genomics of parasitic protozoa, including P. vivax. “If you have falciparum malaria, you could die. If you have vivax malaria, you wish you were dead.”

Malaria is found in tropical and subtropical areas around the globe, in more than 100 countries. According to the CDC, “The highest transmission is found in Africa south of the Sahara and in parts of Oceania such as Papua New Guinea.”

Plasmodium’s life cycle is complicated, but starts when a female Anopheles mosquito feeds on an infected human. The mosquito will pick up malaria gametocytes, the sexual stage of the parasite. If the mosquito picks up both male and female gametocytes, they will fuse in the insect’s gut to produce sporozoites, an immature form of the parasite. “Those sporozoites then migrate through the mosquito and concentrate in the salivary glands,” says Dr. Paul Arguin, Chief of the Domestic Response Unit in the CDC’s Malaria Branch. “When the mosquito takes its next meal, the parasites get injected into the person.”

The sporozoites travel through the human body and eventually infect liver cells, where they grow and change—“kind of like a caterpillar into a butterfly,” Arguin says. These new stages are called merozoites; they leave the liver cells and go into the bloodstream, where they infect red blood cells, devour hemoglobin, and reproduce until the many new parasites burst out and start infecting more red blood cells. This rupturing causes some of the symptoms associated with malaria, which generally start seven to 30 days after the bite from the mosquito (but in two species, the parasite can also lie dormant in the liver and cause a relapse at a later time).

Anyone can get malaria, according to the CDC, but those especially at risk are “people who have little or no immunity to malaria, such as young children and pregnant women or travelers coming from areas with no malaria.” Ninety percent of malaria deaths occur in sub-Saharan Africa. And regardless of which strain of malaria you’ve contracted, things can get bad quickly. “All species [of the parasite] can cause severe malaria,” Arguin says. “The hallmark symptom is high fever and shivering, shaking chills—that’s what most people are going to experience first.” Based on the body’s reaction and how much malaria is in the system, a variety of symptoms might follow—muscle aches and tiredness, sometimes vomiting and diarrhea, and, thanks to the destruction of red blood cells, anemia and jaundice.

“Malaria can go throughout the body and cause all sorts of problems,” Arguin says. “If the parasites start to aggregate in the brain, it’s a syndrome called cerebral malaria where the person will become comatose, develop seizures, [and] eventually [suffer] brain damage and death. It can cause kidney failure, can prevent you from breathing.” If diagnosed and treated right away, malaria can be cured in about two weeks. But if an infection becomes severe and isn’t treated quickly, death might not be far behind.

No one knows how to create a villain like Disney, and in 1943, the company set its sights on the Anopheles mosquito in The Winged Scourge. In the short film—created in partnership with the Office of the Coordinator of Inter-American Affairs and meant to be shown in Latin America—the insect was dubbed “Public Enemy Number 1,” a “tiny criminal” wanted for “willful spreading of disease and theft of working hours. For bringing sickness and misery to untold millions in many parts of the world.” At that point, “many parts of the world” included America, where malaria was endemic in 13 southeastern states. Efforts to eradicate it would have a hand in the formation of two government agencies.

In 1942, the government created the Office of Malaria Control in War Areas to tackle the problem; in 1946, it became the Communicable Disease Center, the forerunner of the CDC. By 1951, malaria had been eliminated from the U.S. According to Arguin, the elimination was the result of a number of things happening simultaneously: Hydroelectric dams and roads were built, and areas of standing water were drained; there was a post-war economic boom; human populations shifted from rural areas into cities; scientists started diagnosing, treating, and tracking cases of the disease; and insecticides like dichloro-diphenyl-trichloroethane, or DDT, were sprayed in mosquito-plagued areas. The U.S. also had the benefit of having “some of the wimpiest Anopheles,” Arguin says. “It was very easy to get them under control to levels where they were not able to sustain transmission effectively.”

Today, the CDC handles everything from alcohol abuse and the flu to Tourette’s and Zika, and its focus is global. The agency devotes $26 million to parasitic diseases, which it fights both in the lab and on the ground. (Each year, the CDC also receives millions of dollars—the amount varies slightly from year to year—from USAID to help co-implement the President’s Malaria Initiative.) There are typically 1700 cases of malaria in the United States every year, most of them originating from people who have traveled to a country with the parasite. The team in the Malaria Branch, which consists of 120 people, provides “a 24/7 hotline to assist doctors, nurses, pharmacists, and labs with the diagnoses and treatment of malaria here in the U.S.,” Arguin says. “We sometimes provide medicine. We validate and develop new tests for malaria. [But] the majority of our activities are focused in the malaria-endemic countries—we support control programs around the world.”

In the 1960s, the science advisory committee—one of several that would go on to become the modern Environmental Protection Agency—was formed to study the effects of the pervasive use of pesticides in response to Rachel Carson’s Silent Spring. Especially under fire was DDT, the popular insecticide which, since the 1940s, had been one of the most effective weapons in the fight against malaria-carrying mosquitoes. Through the spraying of DDT, malaria had an indirect impact on the environment: DDT killed songbirds, which ingested the neurotoxin when they ate earthworms. The pesticide poisoned all life it came into contact with—fish, aquatic life, and land animals and insects—and spread through the food chain. Bald eagles, peregrines, and brown pelicans began laying eggs with weak shells that either broke before hatching or failed to hatch at all, causing their populations to decline nearly to the point of extinction. DDT also got into the atmosphere, traveling far from the places it had been sprayed, even showing up in melting Arctic ice. It persists in the soil, and may stay there for decades.

In humans, exposure to high doses of DDT caused “vomiting, tremors or shakiness, and seizures,” according to the CDC. The pesticide caused liver damage, as well as miscarriages and birth defects. In 1997, a team of scientists analyzed past data and linked DDT to premature births; according to one researcher on the team, “the insecticide could have accounted for 15 percent of infant deaths in the U.S. in the 1960s,” New Scientist reported in 2001. Based on studies in animals, the EPA notes that “DDT is classified as a probable human carcinogen by U.S. and international authorities.”

The use of DDT was banned in the U.S. in the 1970s. Today, under the Stockholm Convention on persistent organic pollutants (POPs), DDT is allowed to be used only for malaria control—and then only as a last resort—and it’s sprayed in homes and buildings in some countries where malaria is endemic. Its use, however, remains controversial: Though it was initially effective in killing mosquitoes, DDT turned out to be only a short-term solution—one that came with an unintended consequence. In just a few decades, widespread spraying had selected for pesticide-resistant mosquitoes that spread malaria with ease.

Humans aren’t the only species to host malaria parasites. There are hundreds of species of malaria, infecting everything from lizards and turtles to white-tailed deer and birds. And no matter what host they’re infecting, the parasites always leave a mark.

Take, for example, what happened in Hawaii. The island chain was a mosquito-free zone until 1826, when Culex quinquefasciatus arrived in water barrels from Mexico carried by the ship Wellington. Invasive species carrying Plasmodium relictum, which causes avian malaria, also made their way to Hawaii, and it was a recipe for disaster: P. relictum doesn’t normally kill birds, but Hawaii’s avians had no natural immunity. According to Michael D. Samuel, professor emeritus of forest and wildlife ecology at the University of Wisconsin, the P. relictum parasite, carried by Culex mosquitoes, sent roughly a third of the island’s brilliantly colored honeycreeper species—important pollinators and seed spreaders—the way of the dodo, and “helped wipe out another 10 avian species, including extinction of the poʻouli during this century.” Climate change and habitat destruction, he tells Mental Floss, exacerbate the problem. “When temperatures rise, the mosquito population can increase and can move further up mountainsides into honeycreeper habitat, putting most of the remaining honeycreepers at risk of extinction.”

But even when it isn’t causing outright extinction, a malaria parasite can affect an animal’s overall fitness. “In one of two systems that was really well studied, female lizards that had malaria laid fewer eggs,” Perkins says. According to a study published in Science in 2015, great reed warblers with avian malaria “laid fewer eggs and were less successful at rearing healthy offspring than uninfected birds.” And recently published research on farmed white-tailed deer fawns in Florida found that “animals that acquire malaria parasites very early in life have poor survival compared to animals that remain uninfected.”

Sometimes, though, the effects of malaria go deeper—all the way down to its host’s DNA. The Science study showed that avian malaria shortened infected birds’ telomeres, compound structures at the ends of chromosomes that protect their DNA. The shorter the telomeres, the shorter a bird’s lifespan—and female birds can pass those short telomeres down to their offspring. In other words, malaria is altering the course of bird evolution.

It has shaped human evolution, too. A number of blood disorders have evolved as a direct result of malaria, and these genetic mutations make some people better equipped to survive infection.

Take sickle cell disease, a blood disorder caused by a mutation in the gene for the oxygen-transporting protein hemoglobin, the malaria parasite’s preferred meal. Carriers of the gene for sickle cell disease have a mutated form of hemoglobin—what’s called hemoglobin S (HbS)—that can actually help a person resist malaria. “The malaria parasite cannot ingest the hemoglobin S as efficiently as it can normal hemoglobin,” Arguin explains.

It’s classic natural selection: Over the course of thousands of years, malaria killed off people who had normal hemoglobin. People who are carriers of the sickle cell trait, however, survived and passed down the resistant genes, which, over the course of generations, have become widespread. In areas of Africa that are severely affected by malaria, as many as 40 percent of people carry at least one HbS gene.

There is a catch-22, of course. While hemoglobin S fends off malaria in carriers, it also means that a person’s descendants, if they inherit the gene from both parents, have a greater chance of dying from sickle cell disease. Those who suffer from the disease experience symptoms from jaundice to swollen hands and feet to extreme tiredness. They have “pain crises”—severe pain that is sometimes chronic—and the disease eventually harms organs including the brain, spleen, heart, kidneys, liver, and more. According to the National Heart, Lung, and Blood Institute, the only cure is a blood and bone marrow transplant, which only a few people afflicted with the disease are able to have. Often, those with sickle cell disease die an early death. The hemoglobin S adaptation came with an evolutionary trade-off that now has dire consequences for hundreds of thousands of people.
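The trade-off can be made concrete with a rough Hardy-Weinberg calculation. This is a textbook simplification rather than a model of any real population: it assumes random mating and ignores the very selection pressures (malaria deaths, sickle cell deaths) that this section describes, so the output is only a ballpark. The function names are illustrative.

```python
import math


def allele_frequency_from_carriers(carrier_share):
    """Solve 1 - (1 - q)**2 = carrier_share for q, the HbS allele frequency.

    `carrier_share` is the fraction of people with at least one HbS copy.
    """
    return 1 - math.sqrt(1 - carrier_share)


def genotype_shares(q):
    """Expected genotype shares at birth under Hardy-Weinberg proportions."""
    p = 1 - q  # frequency of the normal hemoglobin allele
    return {
        "no HbS": p * p,
        "one HbS copy": 2 * p * q,   # carriers, partly protected from malaria
        "two HbS copies": q * q,     # sickle cell disease
    }


q = allele_frequency_from_carriers(0.40)  # the article's upper figure
for genotype, share in genotype_shares(q).items():
    print(f"{genotype}: {share:.1%}")
```

Under these simplifying assumptions, a population in which 40 percent of people carry at least one HbS gene would see about 5 percent of children inherit the gene from both parents.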

“Many of the blood disorders—or hemoglobinopathies, as we call them—have been shaped by malaria parasites because anything that protects people against malaria is going to be selected for in that population,” Carlton says. Those disorders and traits include sickle cell, as well as alpha and beta thalassemia (both of which reduce the production of hemoglobin), G6PD deficiency (an X-linked condition that causes red blood cells to break down and mostly affects males), and Duffy negativity, the absence of the Duffy antigen.

“Many people in African countries are what’s called Duffy negative—they don’t have this particular receptor on certain cells in their body that Plasmodium vivax needs in order to invade red blood cells,” Carlton says. “Once that [Duffy negative gene] was selected for and swept through the human population in Africa, it actually forced the P. vivax species out of Africa.”

Unlike the Anopheles mosquitoes that populate the United States, the species in other parts of the world are deadly efficient transmitters of malaria, and the traditional methods of control—insecticide-treated bed nets, spraying in houses, diagnosis, and treatment—can only go so far. Bed nets develop holes; mosquitoes develop resistance to insecticides; antimalarial drugs that travelers take are prohibitively expensive in endemic countries. Meanwhile, attempts to create a malaria vaccine face a number of challenges.

For one, the human immune response is really only just beginning to be understood. “It’s very complex,” Carlton says. “If you don’t know how the human immune system develops immunity to the malaria parasite, it’s very difficult to try to mimic it.” One hurdle is that the parasite is quick to switch up the surface proteins that allow our immune system to identify it as an outsider and kill it (a process known as antigenic variation). “In order to have a vaccine you would need to cover all of the possible [surface proteins] that we know of, plus any rearrangements [the parasite] might come up with,” Perkins says. “That’s been really hard to do.”

One vaccine has been developed, however, and will be deployed in three African nations in 2019. RTS,S involves piggybacking a piece of the malaria parasite on a hepatitis virus vaccine, which is then injected into a person “so that the immune system will recognize that and react,” Arguin says. In clinical trials, RTS,S prevented four out of 10 cases of malaria, so it's not a cure-all—but, as Alena Pance, a scientist at the Wellcome Sanger Institute, told CNN, even “40 percent is better than no protection at all.”

Despite the development of the vaccine, some scientists are looking for solutions at the atomic level—even in the parasite’s own DNA.

Scientists in one study have identified the genes that prevent one malaria parasite—the deadly Plasmodium falciparum—from growing in human blood, which they hope will aid the development of new vaccines and preventative drugs. Another team of scientists has used cryo-electron microscopy to map the first contact between P. vivax and human red blood cells at the atomic level, allowing them to learn just how the parasite latches onto red blood cells.

Other scientists are exploring options that sound like something out of a sci-fi movie. In 2017, scientists at UC Riverside used the gene editing system CRISPR to tweak the DNA of mosquitoes so that they had “an extra eye, malformed wings, and defects in eye and cuticle color.” The next step is to use gene drives “to insert and spread genes that suppress the insects while avoiding the resistance that evolution would typically favor.”

Scientists at the research consortium Target Malaria are hoping to use gene drives to take on the Anopheles mosquitoes most efficient at transmitting malaria. Gene drives override normal inheritance patterns; in a lab setting, they increase the likelihood that a set of genes will be passed down to offspring from 50 to 99 percent. According to Vox, scientists could use suppressive, propagating gene drives to tweak the genetic code of malaria-spreading mosquitoes to ensure that all their offspring are male (only females bite and spread malaria), which could eventually cause those species to die out.
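To see why the jump from 50 to 99 percent matters, consider a deliberately simple model of how an allele spreads. The sketch below is not Target Malaria's model: it assumes random mating, a very large population, non-overlapping generations, no fitness cost, and no resistance, and it tracks only how common the engineered allele becomes, not what happens to the mosquito population. The function names and the 1 percent starting frequency are illustrative.

```python
def next_frequency(q, transmission):
    """Frequency of the engineered allele in the next generation.

    Homozygotes (share q*q) always pass the allele on. Heterozygotes
    (share 2*q*(1-q)) pass it on with probability `transmission`:
    0.5 under normal inheritance, about 0.99 with the lab figure quoted above.
    """
    return q * q + 2 * q * (1 - q) * transmission


def simulate(q0, transmission, generations):
    """Return the allele frequency at generation 0, 1, ..., `generations`."""
    freqs = [q0]
    for _ in range(generations):
        freqs.append(next_frequency(freqs[-1], transmission))
    return freqs


mendelian = simulate(0.01, 0.50, 10)  # ordinary gene
drive = simulate(0.01, 0.99, 10)      # gene drive

for generation, (m, d) in enumerate(zip(mendelian, drive)):
    print(f"gen {generation:2d}  normal: {m:.3f}  drive: {d:.3f}")
```

In this toy model, an ordinary allele released at 1 percent stays at 1 percent, while the drive allele roughly doubles each generation at first and is present in nearly every mosquito after about 10 generations.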

MIT biologist Kevin Esvelt, who in 2013 was the first to realize the potential of CRISPR gene drives, tells Mental Floss that this method can “invade most populations of the target species”—in this case Anopheles gambiae, A. coluzzii, and A. arabiensis mosquitoes—“everywhere in the world.” (Esvelt’s lab is also developing local drives, which—unlike the self-propagating drives being discussed for malaria-carrying mosquitoes—are designed to stay in a particular environment because they’re built to lose their ability to spread over time.)

In theory, suppressive gene drives could be deployed soon—“if there were some kind of emergency and one absolutely needed to do it, we could pretty much do it,” UC San Diego professor Ethan Bier, who was part of a team that created a gene drive targeting the Anopheles stephensi mosquito, told Vox—but the scientific community is proceeding with caution. They need input from the communities where the mosquitoes would be released, not to mention regulatory approval.

Target Malaria hopes to have field testing approved for gene-edited mosquitoes by 2023. As a first step, they plan to release sterile male mosquitoes in Burkina Faso this year, just to show local communities what their work is like—and that there’s nothing to fear. Next, they’ll release a type of mosquito called an “X-shredder,” which is genetically modified to create mostly male offspring. This would cause the female population to temporarily plummet, thereby reducing malaria transmission. Only later would they consider releasing a self-propagating gene drive that would wipe out the three targeted mosquito species—and, hopefully, most of malaria with it.

If all goes well, Esvelt says, malaria-carrying mosquitoes could be the first species targeted by gene drive technology. “It’s certainly the furthest along,” Esvelt says. A successful demonstration could lead scientists to use the technology to battle schistosomiasis, a chronic disease spread by parasitic worms that, according to the CDC, is “second only to malaria as the most devastating parasitic disease.” More than 200 million people were treated for schistosomiasis in 2016.

According to Esvelt, suppression drives could control any disease spread by a vector or parasite—in theory. But he doubts we’ll ever get there. “The barriers to use are primarily social rather than technical,” he says. “Apart from malaria and possibly schistosomiasis, there are no plausible applications in public health for ‘standard’ gene drive systems that will affect the entire target species; the challenge of securing agreement from all countries affected by, say, dengue is simply too great.”

And the ethics, of course, are complicated.

“If we gain the power to change the world, we become responsible for the consequences whether or not we decide to use it,” Esvelt says. “Today, flesh-eating New World screwworm maggots are devouring millions of South American mammals alive, causing agony so excruciating that human victims often need morphine before doctors can even examine them. That is a wholly natural phenomenon that has been going on for millions of years. We could use [a] suppression drive to prevent that suffering. If we choose to do so, we are responsible for all the consequences, intended and unintended. If we don't, we are responsible for the suffering of every animal devoured alive by screwworms from that day forwards. Who are we, how do we relate to other creatures, and what is our purpose here on this Earth? Technological advances will force us to decide.”

In the time it has taken you to read this far, roughly 80 people have died of malaria.

“The latest data … shows that globally, we are at a crossroads,” WHO Director-General Dr. Tedros Adhanom Ghebreyesus said in a video message played at the inaugural Malaria World Congress held in Melbourne, Australia in July 2018. While malaria death rates worldwide have fallen more than 60 percent since 2000, the parasite’s drug resistance is a serious problem. So, too, is the mosquito’s growing resistance to popular insecticides. “Progress has stalled and funding has flatlined,” Ghebreyesus said. “[We] neglect malaria at our peril.”

In July 2018, the FDA approved a new drug that Teddy Roosevelt would have dreamed of: Krintafel, which treats those who have been previously infected with malaria. It specifically targets Plasmodium vivax, which has a dormant liver stage and can recur years after transmission.

Krintafel is the first new malaria drug in a long time, says Perkins, “despite the fact that scientists have been working intensively on malaria for over 100 years.” And we can’t come up with new treatments fast enough.

“Every time a new medicine has been invented to treat malaria, parasites start developing ways to resist it,” Arguin says. “While we still have some very effective medicines to prevent and treat malaria, new drug development must continue so that replacement medicines will be ready when our current ones need to be retired. On every front, including diagnosis, treatment, prevention, and control, there is a need for continued vigilance and progress to ensure that the tools needed for malaria elimination will be available and effective.”

Still, while there are plenty of challenges, Arguin is optimistic. “There are some parts of the world that are experiencing great successes,” he says. “I know [eradication] is possible, and it’s definitely worthwhile. But in some parts of the world, it is not going to be easy.”