Mind reading used to belong to the realm of science fiction novels and comic strips. But in 2011, a team of scientists from UC Berkeley found a way to reconstruct the videos a person was watching from their brain activity.

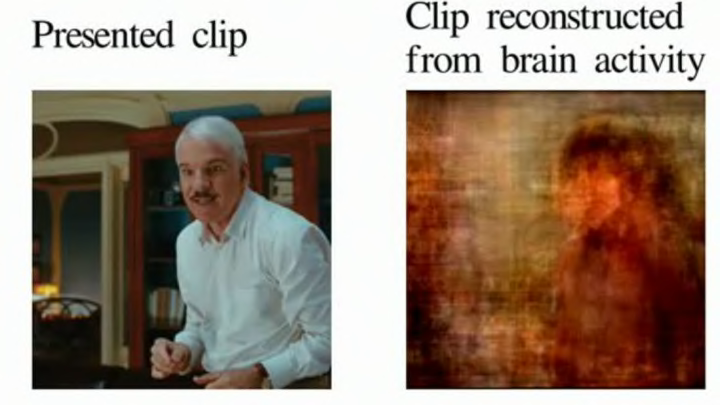

Participants in the study watched Hollywood movie trailers inside a functional magnetic resonance imaging (fMRI) scanner. The researchers then used the scan data to reconstruct the clips' colors, shapes, and movements.

By tracking changes in blood flow within the brain, the team could tell whether the viewer was looking at an actor's face or at an inanimate object such as an airplane. The scientists then searched a large library of YouTube clips for the ones that best matched the participant's brain activity pattern and blended those matches together. The result was a blurry, surreal video filled with ghost-like shapes and movements.

The next step was to reconstruct people's dreams and memories as films. These are a harder target: unlike watching a video, dreaming and remembering produce images without any input from the outside world.

Earlier this year, a team of scientists in Kyoto took a first step toward that goal. The researchers decoded the basic visual elements of participants' dreams from their brain activity.

Participants slept in three-hour blocks inside an MRI scanner. Shortly after they fell asleep, the scientists woke them up and asked them to describe what they had seen in their dreams. The scientists then used an online image search to find simple pictures representing the objects in those descriptions. Next, the participants fell asleep again, and this time the system tried to match their dreams to a series of images.

The system was correct about 60 percent of the time, better than chance, showing that categories of objects seen in a dream can be linked to specific patterns of brain activity. Strung together, those predictions formed a crude visual simulation of the dream.

Within a few years, it may be possible to turn your own dreams into full-length feature films. Who knows? The next blockbuster could come from a good night's sleep.