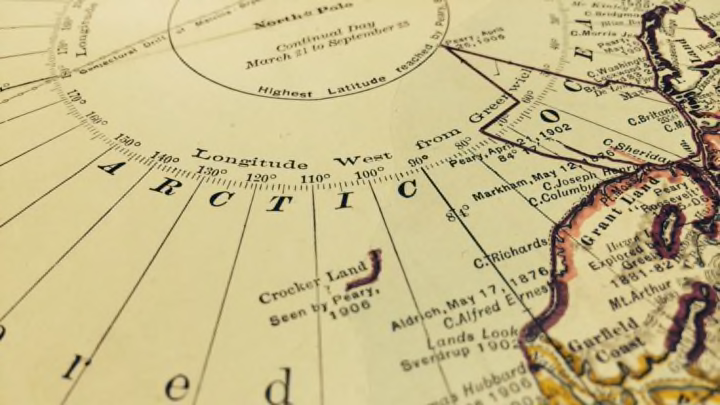

In the archives of the American Geographical Society in Milwaukee lies a century-old map with a peculiar secret. Just north of Greenland, the map shows a small, hook-shaped island labeled “Crocker Land” with the words “Seen By Peary, 1906” printed just below.

The Peary in question is Robert Peary, one of the most famous polar explorers of the late 19th and early 20th centuries, and the man who claimed to have been the first to set foot on the North Pole. But what makes this map remarkable is that Crocker Land was a phantom. It wasn't “seen by Peary”: as later expeditions would prove, the explorer had invented it out of thin Arctic air.

By 1906, Peary was the hardened veteran of five expeditions to the Arctic Circle. Desperate to be the first to the North Pole, he left New York in the summer of 1905 in a state-of-the-art ice-breaking vessel, the Roosevelt—named in honor of one of the principal backers of the expedition, President Theodore Roosevelt. The mission to set foot on the top of the world ended in failure, however: Peary said he sledged to within 175 miles of the pole (a claim others would later question), but was forced to turn back by storms and dwindling supplies.

Peary immediately began planning another attempt, but found himself short of cash. He apparently tried to coax more money out of one of his previous backers, San Francisco financier George Crocker (who had donated $50,000 to the 1905-'06 mission), by naming a supposedly new landmass after him. In his 1907 book Nearest the Pole, Peary claimed that during his 1906 mission he'd spotted “the faint white summits” of undiscovered land 130 miles northwest of Cape Thomas Hubbard, one of the most northerly points in Canada. Peary named this newfound island “Crocker Land” in his benefactor’s honor, hoping to secure another $50,000 for the next expedition.

His efforts were for naught: Crocker diverted many of his resources to helping San Francisco rebuild after the 1906 earthquake, apparently leaving little to spare for Arctic exploration. But Peary did make another attempt at the North Pole after securing backing from the National Geographic Society, and on April 6, 1909, he stood on the roof of the planet, at least by his own account. “The Pole at last!!!” the explorer wrote in his journal. “The prize of 3 centuries, my dream and ambition for 23 years. Mine at last.”

Peary wouldn't celebrate his achievement for long, though. When the explorer returned home, he discovered that Frederick Cook, who had served under Peary on his 1891 North Greenland expedition, was claiming he'd been the first to reach the pole a full year earlier. For a time, debate over the two men's claims raged, and Crocker Land became part of the fight. Cook claimed that on his way to the North Pole he’d traveled to the area where the island was supposed to be but had seen nothing there. Crocker Land, he said, didn't exist.

Peary’s supporters counterattacked. Donald MacMillan, one of Peary’s assistants on the 1909 trip, announced that he would lead an expedition to prove that Crocker Land existed, which would vindicate Peary and ruin Cook’s reputation for good.

There was also, of course, the glory of being the first to set foot on the previously unexplored island. Historian David Welky, author of A Wretched and Precarious Situation: In Search of the Last Arctic Frontier, recently explained to National Geographic that with both poles conquered, Crocker Land was “the last great unknown place in the world.”

After receiving backing from the American Museum of Natural History, the University of Illinois, and the American Geographical Society, the MacMillan expedition departed from the Brooklyn Navy Yard in July 1913. MacMillan and his team took provisions, dogs, a cook, “a moving picture machine,” and wireless equipment, with the grand plan of broadcasting live by radio from the island to the United States.

But almost immediately, the expedition met with misfortune: MacMillan’s ship, the Diana, was wrecked on the voyage to Greenland by her allegedly drunken captain, so MacMillan transferred to another ship, the Erik, to continue his journey. By early 1914, with the seas frozen, MacMillan set out on a 1,200-mile sled journey from Etah, Greenland, through some of the harshest terrain on Earth, in search of Peary’s phantom island.

Though initially inspired by their mission to find Crocker Land, MacMillan’s team grew disheartened as they sledged through the Arctic landscape without finding it. “You can imagine how earnestly we scanned every foot of that horizon—not a thing in sight,” MacMillan wrote in his 1918 book, Four Years in the White North.

But a discovery one April day by Fitzhugh Green, a 25-year-old ensign in the US Navy, gave them hope. As MacMillan later recounted, Green was “no sooner out of the igloo than he came running back, calling in through the door, ‘We have it!’ Following Green, we ran to the top of the highest mound. There could be no doubt about it. Great heavens! What a land! Hills, valleys, snow-capped peaks extending through at least one hundred and twenty degrees of the horizon.”

But visions of fame as the first to set foot on Crocker Land quickly evaporated. “I turned to Pee-a-wah-to,” wrote MacMillan of his Inuit guide (also referred to by some explorers as Piugaattog). “After critically examining the supposed landfall for a few minutes, he astounded me by replying that he thought it was a ‘poo-jok’ (mist).”

Indeed, MacMillan recorded that “the landscape gradually changed its appearance and varied in extent with the swinging around of the Sun; finally at night it disappeared altogether.” For five more days, the explorers pressed on, until it became clear that what Green had seen was a mirage, a polar fata morgana. Named for the sorceress Morgan le Fay of Arthurian legend, these powerful illusions are produced when light bends as it passes through layers of air at different temperatures, such as frigid air lying beneath warmer air, creating convincing images of apparent mountains, islands, and sometimes even floating ships.

Fata morganas are a common occurrence in polar regions, but would a man like Peary have been fooled? “As we drank our hot tea and gnawed the pemmican, we did a good deal of thinking,” MacMillan wrote. “Could Peary with all his experience have been mistaken? Was this mirage which had deceived us the very thing which had deceived him eight years before? If he did see Crocker Land, then it was considerably more than 120 miles away, for we were now at least 100 miles from shore, with nothing in sight.”

MacMillan’s mission was forced to accept the unthinkable and turn back. “My dreams of the last four years were merely dreams; my hopes had ended in bitter disappointment,” MacMillan wrote. But the despair at realizing that Crocker Land didn’t exist was merely the beginning of the ordeal.

MacMillan sent Fitzhugh Green and the Inuit guide Piugaattog west to explore a possible route back to their base camp in Etah. The two became trapped in the ice, and one of their dog teams died. A dispute over the remaining dogs followed, and Green, with an alarming lack of remorse, described what happened next in his diary: “I shot once in the air ... I then killed [Piugaattog] with a shot through the shoulder and another through the head.” Green returned to the main party and confessed to MacMillan. Rather than reveal the murder, the expedition leader told the Inuit members of the mission that Piugaattog had perished in a blizzard.

Several members of the MacMillan mission would remain trapped in the ice for another three years, victims of the Arctic weather. Two rescue attempts by the American Museum of Natural History failed, and it wasn’t until 1917 that MacMillan and his party were finally saved by the steamer Neptune, captained by seasoned Arctic sailor Robert Bartlett.

While stranded in the ice, the men put their time to good use, studying glaciers, astronomy, the tides, Inuit culture, and anything else that attracted their curiosity. They eventually returned with more than 5,000 photographs, thousands of specimens, and some of the earliest film taken of the Arctic (much of which can be seen today in the repositories of the American Geographical Society at the University of Wisconsin–Milwaukee).

It’s unclear whether MacMillan ever confronted Peary about Crocker Land: about what exactly the explorer had seen in 1906, and perhaps about his motives. When news that MacMillan had not found Crocker Land reached the United States, Peary defended himself to the press by noting how difficult spotting land in the Arctic could be, telling reporters, “Seen from a distance ... an iceberg with earth and stones may be taken for a rock, a cliff-walled valley filled with fog for a fjord, and the dense low clouds above a patch of open water for land.” (He maintained, however, that “physical indications and theory” still pointed to land somewhere in the area.) Yet later researchers have pointed out that Peary’s notes from his 1905-'06 expedition don’t mention Crocker Land at all. As Welky told National Geographic, “He talks about a hunting trip that day, climbing the hills to get this view, but says absolutely nothing about seeing Crocker Land. Several crewmembers also kept diaries, and according to those he never mentioned anything about seeing a new continent.”

There’s no mention of Crocker Land in early drafts of Nearest the Pole, either; it appears only in the final manuscript. That suggests Peary had a deliberate reason for including the island.

Crocker, meanwhile, wouldn’t live to learn whether this mysterious new landmass would immortalize him: He died of stomach cancer in December 1909, more than a year after Peary had again set out in the Roosevelt in search of the Pole, and years before MacMillan’s expedition.

Any remnants of the legend of Crocker Land were put to bed in 1938, when Isaac Schlossbach flew over where the mysterious island was supposed to be, looked down from his cockpit, and saw nothing.